Reposted from: Easy Web Scraping in R (rvest and RSelenium) | 不知为不知

A. Rvest

1. Install

install.packages('rvest')

# For the latest development version, install from GitHub

# install.packages("devtools")

devtools::install_github("tidyverse/rvest")

2. Frame and Params

2.1 Read info from website

info1 <- read_html(url)   # url may also be a page-source string, e.g. from RSelenium's getPageSource()

2.2 Node selection

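# html_node(): returns only the first matching node
html_node(x, css = "...")    # or html_node(x, xpath = "...")

# html_nodes(): returns all matching nodes (2 or more)
html_nodes(x, css = "...")   # or html_nodes(x, xpath = "...")

# In rvest >= 1.0.0 these are superseded by html_element() / html_elements(); the old names still work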
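A small offline check of the difference, parsing an inline HTML string instead of a URL (the markup is made up for illustration):

```r
library(rvest)

# read_html() also accepts a literal HTML string, handy for testing selectors offline
page <- read_html('<ul><li class="item">first</li><li class="item">second</li></ul>')

# html_node(): only the first match
first_item <- page %>% html_node(css = "li.item") %>% html_text()

# html_nodes(): every match
all_items <- page %>% html_nodes(css = "li.item") %>% html_text()

# The same selection written as XPath
all_items_xpath <- page %>% html_nodes(xpath = "//li[@class='item']") %>% html_text()

print(first_item)  # "first"
print(all_items)   # "first" "second"
```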

2.3 CSS vs XPath

2.3.1 CSS

CSS selector manual

In Chrome, right-click the element you are interested in and choose "Inspect". In DevTools, right-click the highlighted (blue) node and choose Copy > Copy selector to get its CSS selector.

2.3.2 XPath

XPath manual

XPath experience notes

In Chrome, right-click the element you are interested in and choose "Inspect". In DevTools, right-click the highlighted (blue) node and choose Copy > Copy XPath to get its XPath.

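Commonly used XPath syntax:

- "/" vs "//": "/" selects the direct children (one level down) of the current node, while "//" selects descendants at any depth (children, grandchildren, ...).
- text(): selects text nodes; the text of all matches is returned together in a single vector.
- not(): selects the elements that do NOT satisfy the condition xxx.
- last(): selects the last child element under a given node.
- @attribute="xxx": selects the elements whose attribute `attribute` equals xxx.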
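The rules above, tried on a made-up menu fragment (the ids and class names are illustrative only):

```r
library(rvest)

# Made-up markup for illustration
doc <- read_html('<div id="menu"><ul>
  <li class="dish">Noodles</li>
  <li class="dish sold-out">Dumplings</li>
  <li class="dish">Rice</li>
</ul></div>')

# "/" : direct children only; <li> is a grandchild of the div, so nothing matches
direct <- doc %>% html_nodes(xpath = '//div[@id="menu"]/li')

# "//" : descendants at any depth (also shows @attribute="xxx" via @id="menu")
deep <- doc %>% html_nodes(xpath = '//div[@id="menu"]//li')

# text(): the text nodes of all matches come back together in one vector
texts <- doc %>% html_nodes(xpath = '//li/text()') %>% html_text()

# not(): <li> elements whose class is NOT exactly "dish sold-out"
available <- doc %>%
  html_nodes(xpath = '//li[not(@class="dish sold-out")]') %>%
  html_text()

# last(): the last <li> of the list
last_dish <- doc %>% html_node(xpath = '//ul/li[last()]') %>% html_text()
```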

2.4 Extracting information

2.4.1 html_text()

read_html(url) %>% html_node(css = 'p.blog-summary') %>% html_text()

[1] Abstract:

[2] This article briefly introduces the development of recurrent neural networks (RNN), ....

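page %>%   # page: a document returned by read_html()
  html_nodes(css = 'div.details.clear div.address p:nth-child(2)') %>%
  html_text() %>%
  regmatches(., regexpr('[0-9.\\-]+', .)) %>%   # keep the first run of digits, dots or hyphens
  {if (length(.) == 0) 'wu' else .}             # 'wu' (pinyin for "none") when nothing matched

html_text() extracts all non-markup text between the opening and closing tags of the p node.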
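Wrapped as a function, the pipeline above can be tried on inline HTML. extract_number() and the markup are hypothetical:

```r
library(rvest)

# Hypothetical helper: text of the 2nd <p> in div.address, reduced to its first
# run of digits/dots/hyphens, or the placeholder 'wu' when nothing matches
extract_number <- function(html) {
  read_html(html) %>%
    html_nodes(css = "div.address p:nth-child(2)") %>%
    html_text() %>%
    regmatches(., regexpr("[0-9.\\-]+", .)) %>%
    {if (length(.) == 0) "wu" else .}
}

extract_number('<div class="address"><p>No. 1 Road</p><p>Tel: 010-8888</p></div>')
extract_number('<div class="address"><p>No. 1 Road</p></div>')
```

The fallback matters inside lapply()/data.frame() pipelines: a zero-length result would otherwise drop or misalign rows.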

2.4.2 html_attr()
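html_attr() returns NA when the selected node lacks the attribute, so the selector has to land on the element that actually carries it. A small offline sketch; the markup is made up and https://example.com is a placeholder base URL for xml2::url_absolute():

```r
library(rvest)

# Made-up markup: the href sits on the <a> inside span.name
page <- read_html('<span class="name"><a href="/u/3283d485c98a">author</a></span>')

# Asking the <span> for an attribute it does not have gives NA
span_href <- page %>% html_node("span.name") %>% html_attr("href")

# Selecting the <a> itself returns the (relative) link
href <- page %>% html_node("span.name a") %>% html_attr("href")

# Resolve the relative link against a base URL
full_url <- xml2::url_absolute(href, "https://example.com")
```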

read_html(url) %>% html_node(css = 'span.name a') %>% html_attr('href')   # the href is on the <a> inside span.name

[1] "/u/3283d485c98a"

3. Crawl cases

3.1 Functions

3.1.1 html_nodes

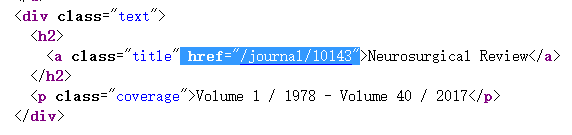

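To extract the highlighted information in the screenshot above:

The target text sits inside <a class="title name">. A class attribute containing a space lists two classes, so chain them with dots in the selector: html_nodes('a.title.name'). Selecting on a single class, as with 'a.title' below, also matches these links.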
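The multi-class rule can be checked offline on made-up markup. Note that 'a.title name' (with a space) would instead look for a <name> element inside the link:

```r
library(rvest)

# Made-up markup: both links have class "title", only the first also has "name"
page <- read_html('<a class="title name" href="/journal/1">A</a><a class="title" href="/journal/2">B</a>')

both_classes <- page %>% html_nodes("a.title.name") %>% html_text()  # must carry both classes
title_only   <- page %>% html_nodes("a.title") %>% html_text()       # any <a> with class "title"
```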

web1 %>% html_nodes(css='a.title') %>% html_text()

[1] "International Journal"

[2] "Nano Reports"

[3] "Computational Social Networks"

[4] "Journal of Family Voilences"

... ...

3.1.2 html_attr

To get the link (href) of each highlighted entry, run:

web1 %>% html_nodes(css='a.title') %>% html_attr('href')

[1] "/journal/10143" "/journal/10144" "/journal/10145"

[4] "/journal/40134" "/journal/40149" "/journal/58521"

3.1.3 html_table
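html_table() returns a list with one data frame per <table> on the page, which is why the example in this section picks the third one with extract2(3). A quick offline check on a made-up table:

```r
library(rvest)

# Made-up table whose first row is a header of <th> cells
page <- read_html('<table>
  <tr><th>type</th><th>price</th></tr>
  <tr><td>bought</td><td>4.122</td></tr>
  <tr><td>considered</td><td>4.109</td></tr>
</table>')

tables <- html_table(page)  # list: one data frame per <table>
first  <- tables[[1]]       # same as magrittr::extract2(tables, 1)
print(first)
```

Numeric-looking columns such as price are converted to numbers.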

library(magrittr)   # for extract2()
library(rvest)
url1 <- 'https://amjiuzi.github.io/2017/08/13/ggradar/'
read_html(url1) %>% html_table() %>% extract2(3)   # extract the 3rd table on the page

        type price price2 allowance YouHao
1     bought 4.122  4.109     4.139  4.122
2 considered 4.109  4.108     4.133  4.109
3 NoInterest 4.126  4.125     4.107  4.126

3.2 Samples

3.2.1 Sample 1

library(rvest)
library(stringr)
rate_links1 <- str_c(bas_info1[,3], 'collections')   # build the list of URLs
rating_cnt <- lapply(rate_links1,
                     function(x) {
                       Sys.sleep(1)   # pause between requests
                       tryCatch(read_html(x) %>%
                                  html_nodes(css = "span#collections_bar span") %>%
                                  html_text() %>% str_extract("[0-9]+") %>%
                                  as.numeric(),
                                error = function(e) e)   # on failure, keep the error object and move on
                     })
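The lapply() above stores the error object itself when a page fails, which makes the results awkward to combine. A hedged variant returns NA instead; count_collections() is a hypothetical name, and the HTML is made up so the function can be tested without a network:

```r
library(rvest)
library(stringr)

# Hypothetical variant: NA instead of an error object, so results can go through unlist()/sapply()
count_collections <- function(src) {
  tryCatch(
    read_html(src) %>%
      html_nodes(css = "span#collections_bar span") %>%
      html_text() %>%
      str_extract("[0-9]+") %>%
      as.numeric(),
    error = function(e) NA_real_
  )
}

# Offline check: an inline page, and a file path that does not exist
html <- '<span id="collections_bar"><span>123 watched</span><span>45 want to watch</span></span>'
ok <- count_collections(html)
failed <- count_collections(file.path(tempdir(), "no-such-page.html"))
```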

# -----------------------------

danpin_info <- function(x) {
  class1 <- x %>% html_node('h3') %>% html_text() %>% as.character()
  danp_name <- x %>% html_nodes('div div h3') %>%
    html_text() %>% as.character()
  # danp_price <- ...   (extracted in the same way; omitted here)
  danp_sales <- x %>% html_nodes(css = 'span.color-mute.ng-binding') %>%
    .[seq_along(.) %% 2 == 0] %>%   # keep every second matching span
    html_text() %>%
    regmatches(., regexpr('[0-9]+', .)) %>%
    as.numeric()
  # class1 has length 1 while danp_name has length n (> 1);
  # data.frame() recycles class1 across the n rows
  data.frame(class1, danp_name, danp_price, danp_sales)
}

goods <- read_html(page1) %>%
  html_nodes(css = 'div.shopmenu-list.clearfix.ng-scope')   # one node per menu group
danpin1 <- do.call(rbind, lapply(goods, danpin_info))

B. RSelenium

RSelenium is more complex than rvest. For dynamic pages that rvest alone cannot scrape, use RSelenium together with rvest.

1. Install

1.1 JDK install

1.2 Selenium (Windows)

Selenium download page

On Linux or macOS, search online for the equivalent setup.

1.3 Browser support

Firefox + geckodriver

Chrome + chromedriver

Put the unzipped driver in the Firefox installation folder (the same folder as firefox.exe).

It is best to also add the Firefox installation folder to the PATH environment variable.

2. Frame and Params

2.1 Start the Selenium server

Press Win + X and open PowerShell (Admin), then run:

java -jar xxx/selenium-server-standalone-xxx.jar

2.2 Start the browser

library(RSelenium)
remDr <- remoteDriver(remoteServerAddr = 'localhost', port = 4444L,
                      browserName = 'chrome')
remDr$open(silent = TRUE)
url <- 'https://movie.douban.com/tag/#/'
remDr$navigate(url)
# remDr$refresh()         # reload the page
# remDr$goBack()          # browser "Back"
# remDr$getCurrentUrl()   # URL of the current page
# remDr$goForward()       # browser "Forward"

2.3 Crawl

element1 <- remDr$findElement(using = 'css', '')       # ~ html_node(css = ''): one element
elements1 <- remDr$findElements(using = 'xpath', '')   # ~ html_nodes(xpath = ''): a list of elements
element1$getElementText()                              # ~ html_text()
element1$getElementAttribute('href')                   # ~ html_attr('href')

2.4 Events

element1 <- remDr$findElement(using = "xpath", "/html/body/div[3]/div[2]/div[2]")
remDr$click(2)                 # click at the current mouse position; 2 = right button (0 = left, 1 = middle)
element1$clickElement()        # left-click the element
element1$clearElement()        # clear an input box before typing
element1$sendKeysToElement(list('R cran'))                  # type text
element1$sendKeysToElement(list('R cran', key = 'enter'))   # type text, then press Enter
element1$setElementAttribute("class", 'checked')            # tick a checkbox, method 1: set its class
element1$sendKeysToElement(list(key = 'space'))             # tick a checkbox, method 2: press Space

# --- expand/collapse (zhankai/shouqi) the details of each store
zhankai1 <- remDr$findElements(using = 'css',
                               'ul > li > div.info > div > div.more')
lapply(zhankai1, function(x) x$clickElement())

2.5 Window switch

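currWin <- remDr$getCurrentWindowHandle()              # handle of the current window
allWins <- unlist(remDr$getWindowHandles())            # handles of all open windows
otherWindow <- allWins[!allWins %in% currWin[[1]]]     # the window that is not the current one
remDr$switchToWindow(otherWindow)                      # move to the newly opened window

2.6 Frames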

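## Switch into an iframe (here the first one, the left frame of the page)
frameElems <- remDr$findElements(using = "tag name", "iframe")
sapply(frameElems, function(x) { x$getElementAttribute("src") })   # list each frame's src to pick the right one
remDr$switchToFrame(frameElems[[1]])
page1 <- remDr$getPageSource()[[1]]   # source of the frame's document
library(rvest)
name1 <- read_html(page1) %>%
  html_nodes(xpath = '//select[@name="fundcode"]/option') %>%
  html_text()
# remDr$switchToFrame(NULL) returns to the top-level page

2.7 Close the browser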

remDr$close()   # close the browser session

3. Some Solutions in Practice

3.1 Turning pages

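library(rvest)   # for %>%
user0 <- c()     # accumulators for the scraped fields
rev0 <- c()
html0 <- c()
page.previous <- 0   # initialise with different values so the loop runs at least once
page.current <- 1
while (page.previous != page.current) {
  ... ...
  page.previous <- remDr$findElement('css', 'em.current')$getElementText()[[1]] %>% as.numeric()
  next1 <- remDr$findElement('css', 'a.next_page')
  next1$clickElement()
  Sys.sleep(3)   # wait for the next page to load
  # on the last page "next" has no effect, the page number stays the same and the loop ends
  page.current <- remDr$findElement('css', 'em.current')$getElementText()[[1]] %>% as.numeric()
}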
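The loop above stops when clicking "next" no longer changes the page number. As a sketch, the same stopping rule can be wrapped in a function and checked without a browser; paginate(), read_page_no(), click_next() and scrape() are hypothetical names, and the stubs stand in for the RSelenium calls:

```r
# Hypothetical helper: scrape, click "next", stop once the page number stops changing
paginate <- function(read_page_no, click_next, scrape = function(page) NULL,
                     max_pages = 1000) {
  results <- list()
  for (k in seq_len(max_pages)) {          # max_pages guards against endless loops
    page_before <- read_page_no()
    results <- c(results, list(scrape(page_before)))
    click_next()
    if (read_page_no() == page_before) break   # "next" had no effect: last page
  }
  results
}

# Offline stub: a site with 4 pages
current <- 1
last_page <- 4
pages <- paginate(
  read_page_no = function() current,
  click_next   = function() if (current < last_page) current <<- current + 1,
  scrape       = function(page) page
)

# With RSelenium (not run here):
# paginate(
#   read_page_no = function() as.numeric(remDr$findElement('css', 'em.current')$getElementText()[[1]]),
#   click_next   = function() { remDr$findElement('css', 'a.next_page')$clickElement(); Sys.sleep(3) },
#   scrape       = function(page) remDr$getPageSource()[[1]]
# )
```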

## ------- or locate "next" as the last item of the pagination list
next1 <- remDr$findElement(using = 'css', 'ul.pagination.clear li:last-child')
next1$clickElement()

3.2 The End key and up_arrow/down_arrow

library(RSelenium)

library(rvest)

for (i in 1:5) {

pagedown <- remDr$findElement('css','body')

pagedown$sendKeysToElement(list(key='end'))

Sys.sleep(3)

... ...

next1 <- remDr$findElement('css','a.fui-next')

next1$clickElement()

Sys.sleep(3)

}

remDr$sendKeysToActiveElement(list(key = 'down_arrow', key = 'down_arrow', key = 'enter'))

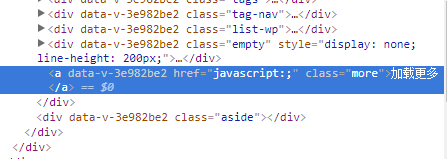

remDr$sendKeysToActiveElement(list(key = 'up_arrow', key = 'up_arrow', key = 'enter'))3.3 Find more
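This section keeps clicking a "more" link until it can no longer be found. As a sketch, that idea can be packaged as a function that counts successful clicks. click_until_gone() and the stub are hypothetical; in a real run, click() would wrap remDr$findElement('css', 'div.article a.more')$clickElement():

```r
# Hypothetical helper: call click() until it throws an error, then stop.
# Returns the number of successful clicks.
click_until_gone <- function(click, max_clicks = 10000, pause = 0) {
  n <- 0
  while (n < max_clicks) {
    ok <- tryCatch({ click(); TRUE }, error = function(e) FALSE)
    if (!ok) break        # element not found any more: everything is shown
    n <- n + 1
    Sys.sleep(pause)
  }
  n
}

# Offline stub: a "more" button that disappears after 3 clicks
remaining <- 3
fake_click <- function() {
  if (remaining == 0) stop("no such element")
  remaining <<- remaining - 1
}
n_clicks <- click_until_gone(fake_click)
```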

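i <- 0
while (i <= 10000) {
  # error= must be a function; here it reports that the "more" link is gone
  clicked <- tryCatch({
    remDr$findElement('css', 'div.article a.more')$clickElement()
    TRUE
  }, error = function(e) FALSE)
  if (!clicked) { message("All.Pages.Shown"); break }
  Sys.sleep(2)
  i <- i + 1
}

3.4 title-1 vs info-n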
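The pattern in this section builds one row per item and repeats the store-level fields on each row (one title, n items). A self-contained sketch on made-up markup; store_rows() and the example.com URLs are hypothetical:

```r
library(rvest)

# Made-up markup: two stores, each with one link and several items
html <- '<ul class="list-ul">
  <li><div class="info"><a href="//shop.example.com/1">Store A</a>
      <div class="other"><a>Tea</a><a>Cake</a></div></div></li>
  <li><div class="info"><a href="//shop.example.com/2">Store B</a>
      <div class="other"><a>Coffee</a></div></div></li>
</ul>'

# One row per item; the store URL (length 1) is recycled by data.frame()
store_rows <- function(store) {
  store_url <- store %>% html_node("div.info a") %>% html_attr("href")
  if (startsWith(store_url, "//")) store_url <- paste0("https:", store_url)
  items <- store %>% html_nodes("div.info div.other a") %>% html_text()
  data.frame(store_url, item = items, stringsAsFactors = FALSE)
}

stores <- read_html(html) %>% html_nodes("ul.list-ul > li")
res <- do.call(rbind, lapply(stores, store_rows))
print(res)
```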

## ------ store-level info, one per store (title-1)
store_info <- function(x) {
  store_url <- x %>% html_node('div.info a') %>%
    html_attr('href') %>% as.character() %>%
    {if (substr(., 1, 2) == '//') paste('https:', ., sep = '') else .}   # make protocol-relative links absolute
  ... ...
  danpins <- x %>% html_nodes('div.info div.other a')
  danpin <- do.call(rbind, lapply(danpins, danp_detail))
  data.frame(store_url, danpin)   # store_url (length 1) is recycled over the item rows
}

## ------ item (danpin) details, n per store (info-n)
danp_detail <- function(x) {
  danp_name <- x %>% html_nodes('div h4') %>%
    html_text() %>% as.character() %>% {if (length(.) == 0) 'wu' else .}
  ... ...
  data.frame(cbind(danp_name, ...))   # "..." = the other item fields (elided)
}

## ------ crawl and merge
res0 <- data.frame()
for (i in 1:pages1) {
  # expand every store so that all of its items are in the page source
  zhan1 <- remDr$findElements(using = 'css',
                              'ul > li > div.info > div > div.more')
  lapply(zhan1, function(x) x$clickElement())
  page1 <- remDr$getPageSource()[[1]]
  dian1 <- read_html(page1) %>%
    html_nodes(css = 'ul.list-ul > li')   # one node per store (dian)
  res1 <- data.frame(do.call(rbind, lapply(dian1, store_info)))
  res0 <- rbind(res0, res1)
  print(paste('Page ', i, ' is over', sep = ''))
  Sys.sleep(5)
}
dim(res0)

3.5 tryCatch
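`next` cannot be called from inside a tryCatch() error handler, because the handler is a separate function rather than the loop body; the snippet in this section therefore sets a flag with <<-. A minimal alternative, assuming NULL is never a valid result, lets tryCatch() return a sentinel that the loop tests (the inputs are made up):

```r
# Made-up inputs: the second one makes log10() fail
inputs <- list(1, "not a number", 100)
results <- c()

for (i in seq_along(inputs)) {
  # tryCatch() returns the handler's value on error; NULL marks a failure
  value <- tryCatch(log10(inputs[[i]]), error = function(e) NULL)
  if (is.null(value)) next   # skip in the loop body itself
  results <- c(results, value)
}
print(results)
```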

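skip_to_next <- FALSE
for (i in 1:10) {
  tryCatch(print(b),   # put the code that may fail here (b is undefined, so this errors)
           # on error, set a flag in the enclosing environment
           error = function(e) { print("hi"); skip_to_next <<- TRUE })
  # `next` cannot be called inside the handler, so check the flag in the loop body
  if (skip_to_next) { skip_to_next <- FALSE; next }
}

3.6 Download images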
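way1 names each saved file after the last "/"-separated segment of the image URL. For plain URLs like the made-up ones below, base R's basename() returns the same segment and reads more simply:

```r
# Made-up image URLs
imgs <- c("https://example.com/a/pic1.jpg", "https://example.com/b/pic2.png")

# way1's approach: split on "/" and keep the last piece
by_split <- sapply(imgs, function(u) tail(unlist(strsplit(u, "/")), 1), USE.NAMES = FALSE)

# The same result with basename()
by_basename <- basename(imgs)

# Destination paths inside ./imgs
dest <- file.path("imgs", by_basename)
```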

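way1:

dir.create('./imgs', showWarnings = FALSE)   # no warning if the folder already exists
imgs1 <- unique(as.character(res0$img1))
for (i in seq_along(imgs1)) {
  tryCatch({
    download.file(imgs1[i],
                  paste0('./imgs/', tail(unlist(strsplit(imgs1[i], '/')), 1)),   # file name = last URL segment
                  mode = 'wb')   # binary mode, needed for images on Windows
    print(paste('Image', i, 'of', length(imgs1), 'done !!'))
    # Sys.sleep(5)
  },
  # on failure, report it; the loop then continues with the next image
  error = function(e) print(paste('Image', i, 'failed:', conditionMessage(e))))
}

way2: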

library(rvest)
library(httr)
for (i in seq_len(nrow(df0))) {
  # html_session()/jump_to() are the pre-1.0 names; rvest >= 1.0.0 calls them session()/session_jump_to()
  sess <- html_session(df0$url2[i])
  imgsrc <- sess %>%
    read_html() %>%
    html_node(xpath = '//*[@id="pageBody"]/div/a/img') %>%
    html_attr('src')
  if (is.na(imgsrc)) {
    print('hi'); next   # no image on this page
  } else {
    img <- jump_to(sess, paste0('https://content.sciendo.com', imgsrc))
    # side effect: writes the raw image bytes to a .jpg named after the last segment of url2
    writeBin(img$response$content,
             paste0(tail(unlist(strsplit(df0$url2[i], '/')), 1), '.jpg'))
    print(paste(i, 'of', nrow(df0), 'done !!'))
  }
}

3.7 How to save time on page loading

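remDr$open()
remDr$setTimeout(type = "implicit", milliseconds = 3000)   # wait up to 3 s when looking up elements
# type = "page load" instead caps how long navigation may wait for a page

In addition, open DevTools (F12) > Network, find the requests that take the longest to load, right-click them and choose "Block request URL" or "Block request domain".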

C. Combining RSelenium and rvest

library(RSelenium)
library(rvest)
remDr <- remoteDriver(remoteServerAddr = 'localhost', port = 4444L,
                      browserName = 'chrome')
remDr$open(silent = TRUE)
url <- 'https://movie.douban.com/tag/#/'
remDr$navigate(url)

1. By RSelenium

remDr$findElement('css', 'xxxx')$getElementText()[[1]]   # findElement() returns a single element, no [[1]] needed

2. By rvest

websrc <- remDr$getPageSource()[[1]]   # HTML as currently rendered in the browser
read_html(websrc) %>% html_nodes(css = 'xxx') %>% html_text()

rvest is simpler and more flexible than RSelenium, so most of the time we choose the rvest approach.

D. Cleaning scraped text with stringr
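Each step of the cleaning pipeline can be checked on a literal string first. The text here is made up to resemble a scraped rating block:

```r
library(magrittr)
library(stringr)

# Made-up text shaped like a scraped rating block
raw <- "\n   Rating\n   8.9\n   12345 votes\n"

pieces <- raw %>%
  str_trim() %>%                # drop leading/trailing whitespace, including newlines
  str_replace_all(" ", "") %>%  # drop the spaces left inside the text
  str_split("\n") %>%           # one element per line
  unlist()

numbers <- str_extract(pieces, "[0-9.]+")   # NA where a piece has no digits
print(pieces)
print(numbers)
```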

library(rvest)
library(stringr)
lapply(bas_info1[,3],
       function(x) {
         Sys.sleep(2)
         tryCatch(read_html(x) %>% html_nodes(css = "div.rating_self.clearfix") %>%
                    html_text() %>% str_trim() %>%   # trim leading/trailing whitespace
                    str_replace_all(' ', '') %>%     # remove spaces inside the text
                    str_split("\n") %>%              # split the text on '\n'
                    unlist() %>% .[c(2, 7)] %>%      # keep the 2nd and 7th pieces
                    str_extract('[0-9.]+'),          # extract digits and '.'
                  error = function(e) e)
       })

E. Progress Bar

n <- length(prov_url1)   # number of pages to crawl
info0 <- data.frame()
# winProgressBar() is available on Windows only
pb <- winProgressBar(title = "Progress Bar", min = 0,
                     max = n, width = 300)
for (j in 1:n) {
  info1 <- read_html(prov_url1[j]) %>%
    html_table(header = TRUE) %>% do.call(rbind, .)   # stack all tables of the page
  info0 <- rbind(info0, info1)
  setWinProgressBar(pb, j, title = 'Crawl Progress',
                    label = paste(round(j / n * 100, 0), "% done"))
}
close(pb)
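winProgressBar() exists only in Windows builds of R. A cross-platform sketch uses txtProgressBar() from the utils package; the items vector and toupper() are placeholders for the real URLs and scraping step:

```r
# Placeholder work items standing in for prov_url1
items <- c("a", "b", "c", "d")
n <- length(items)
results <- character(n)

# txtProgressBar() prints to the console and works on every OS
pb <- txtProgressBar(min = 0, max = n, style = 3)
for (j in seq_len(n)) {
  results[j] <- toupper(items[j])   # replace with read_html(prov_url1[j]) %>% ...
  setTxtProgressBar(pb, j)
}
close(pb)
```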